How Data Dimension Change In Convolutional Network

This article was published as a part of the Data Science Blogathon

Introduction

Reckoner Vision is evolving rapidly mean solar day-by-day. When we talk well-nigh Computer Vision, the term Convolutional Neural Networks (abbreviated equally CNN) comes into our mind because CNN is heavily used here.

Therefore it becomes necessary for every aspiring Data Scientist and Motorcar Learning Engineer to have a good cognition of these Neural Networks.

In this commodity, we will discuss the most important questions on the Convolutional Neural Networks(CNNs)which is helpful to get yous a clear understanding of the techniques, and likewise for Data Science Interviews, which covers its very primal level to complex concepts.

Let's get started,

one. What do you mean by Convolutional Neural Network?

A Convolutional neural network (CNN, or ConvNet) is another type of neural network that can exist used to enable machines to visualize things.

CNN'southward are used to perform analysis on images and visuals. These classes of neural networks can input a multi-channel image and work on information technology easily with minimal preprocessing required.

These neural networks are widely used in:

- Image recognition and Image nomenclature

- Object detection

- Recognition of faces, etc.

Therefore, CNN takes an paradigm as an input, processes it, and classifies information technology nether certain categories.

Epitome Source: Google Images

2. Why do nosotros prefer Convolutional Neural networks (CNN) over Bogus Neural networks (ANN) for image information as input?

1. Feedforward neural networks can larn a single feature representation of the paradigm but in the case of complex images, ANN will neglect to give better predictions, this is because it cannot learn pixel dependencies nowadays in the images.

2. CNN tin learn multiple layers of characteristic representations of an image by applying filters, or transformations.

3. In CNN, the number of parameters for the network to learn is significantly lower than the multilayer neural networks since the number of units in the network decreases, therefore reducing the chance of overfitting.

iv. Likewise, CNN considers the context data in the small neighborhood and due to this characteristic, these are very of import to achieve a amend prediction in information similar images. Since digital images are a bunch of pixels with high values, it makes sense to use CNN to analyze them. CNN decreases their values, which is ameliorate for the training phase with less computational power and less information loss.

3. Explicate the dissimilar layers in CNN.

The unlike layers involved in the architecture of CNN are every bit follows:

1. Input Layer: The input layer in CNN should contain prototype data. Epitome data is represented by a three-dimensional matrix. We accept to reshape the image into a unmarried column.

For Case, Suppose we have an MNIST dataset and you have an epitome of dimension 28 x 28 =784, yous demand to catechumen it into 784 ten 1 before feeding it into the input. If we accept "1000" training examples in the dataset, then the dimension of input will be (784, thousand).

two. Convolutional Layer: To perform the convolution performance, this layer is used which creates several smaller picture windows to go over the data.

3. ReLU Layer: This layer introduces the not-linearity to the network and converts all the negative pixels to zero. The final output is a rectified feature map.

four. Pooling Layer: Pooling is a down-sampling operation that reduces the dimensionality of the feature map.

5. Fully Connected Layer: This layer identifies and classifies the objects in the image.

6. Softmax / Logistic Layer: The softmax or Logistic layer is the last layer of CNN. It resides at the end of the FC layer. Logistic is used for binary nomenclature problem argument and softmax is for multi-classification trouble statement.

7. Output Layer: This layer contains the label in the form of a i-hot encoded vector.

4. Explain the significance of the RELU Activation role in Convolution Neural Network.

RELU Layer – Afterwards each convolution functioning, the RELU functioning is used. Moreover, RELU is a non-linear activation function. This operation is applied to each pixel and replaces all the negative pixel values in the feature map with zero.

Usually, the image is highly non-linear, which means varied pixel values. This is a scenario that is very difficult for an algorithm to make correct predictions. RELU activation function is applied in these cases to decrease the not-linearity and make the chore easier.

Therefore this layer helps in the detection of features, decreasing the non-linearity of the image, converting negative pixels to zero which also allows detecting the variations of features.

Therefore non-linearity in convolution(a linear operation) is introduced by using a non-linear activation role similar RELU.

![16: Rectified Linear Unit [94]. | Download Scientific Diagram | CNN questions](https://www.researchgate.net/profile/Saad-Albawi/publication/328048988/figure/fig17/AS:677675828011008@1538581916318/Figure-316-Rectified-Linear-Unit-94.jpg)

Image Source: Google Images

5. Why do we use a Pooling Layer in a CNN?

CNN uses pooling layers to reduce the size of the input image and then that it speeds upward the computation of the network.

Pooling or spatial pooling layers: Likewise called subsampling or downsampling.

- It is practical after convolution and RELU operations.

- It reduces the dimensionality of each characteristic map by retaining the well-nigh important information.

- Since the number of subconscious layers required to learn the complex relations present in the image would be big.

As a consequence of pooling, fifty-fifty if the picture were a piffling tilted, the largest number in a certain region of the characteristic map would have been recorded and hence, the feature would have been preserved. As well as another benefit, reducing the size past a very significant amount volition use less computational power. So, it is as well useful for extracting dominant features.

six. What is the size of the feature map for a given input size image, Filter Size, Footstep, and Padding amount?

Pace tells united states about the number of pixels we will leap when nosotros are convolving filters.

If our input prototype has a size of north x n and filters size f ten f and p is the Padding amount and due south is the Stride, then the dimension of the characteristic map is given past:

Dimension = floor[ ((northward-f+2p)/s)+1] x floor[ ((n-f+2p)/s)+ane]

7. An input image has been converted into a matrix of size 12 X 12 forth with a filter of size 3 Ten 3 with a Stride of ane. Determine the size of the convoluted matrix.

To calculate the size of the convoluted matrix, we use the generalized equation, given by:

C = ((n-f+2p)/s)+1

where,

C is the size of the convoluted matrix.

n is the size of the input matrix.

f is the size of the filter matrix.

p is the Padding amount.

s is the Stride applied.

Here due north = 12, f = three, p = 0, s = one

Therefore the size of the convoluted matrix is 10 X ten.

viii. Explain the terms "Valid Padding" and "Same Padding" in CNN.

Valid Padding: This type is used when in that location is no requirement for Padding. The output matrix after convolution volition accept the dimension of (n – f + 1) Ten (n – f + 1).

Same Padding: Hither, we added the Padding elements all around the output matrix. Subsequently this type of padding, we will go the dimensions of the input matrix the same equally that of the convolved matrix.

Afterward Same padding, if we use a filter of dimension f x f to (n+2p) x (n+2p) input matrix, then nosotros volition get output matrix dimension (due north+2p-f+ane) x (northward+2p-f+1). As we know that later applying Padding we volition get the same dimension as the original input dimension (n 10 northward). Hence nosotros have,

(n+2p-f+1)ten(north+2p-f+1) equivalent to nxn

n+2p-f+one = northward

p = (f-one)/2

So, past using Padding in this mode we don't lose a lot of information and the image also does not shrink.

9. What are the different types of Pooling? Explain their characteristics.

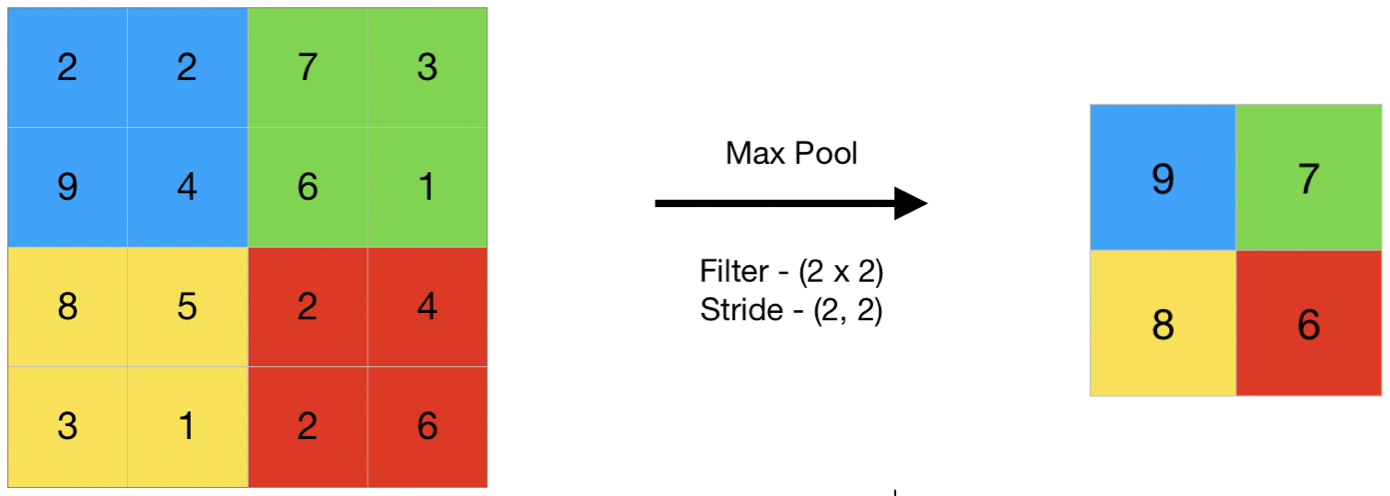

Spatial Pooling can exist of different types –max pooling, average pooling, and Sum pooling.

- Max pooling: Once we obtain the characteristic map of the input, we will use a filter of determined shapes across the feature map to get the maximum value from that portion of the characteristic map. It is also known as subsampling because from the entire portion of the characteristic map covered by filter or kernel we are sampling one single maximum value.

- Average pooling: Computes the average value of the feature map covered past kernel or filter, and takes the floor value of the result.

- Sum pooling: Computes the sum of all elements in that window.

Characteristics:

Max pooling returns the maximum value of the portion covered by the kernel and suppresses the Noise, while Average pooling only returns the measure of that portion.

The about widely used pooling technique is max pooling since it captures the features of maximum importance with it.

Image Source: Google Images

10. Does the size of the feature map e'er reduce upon applying the filters? Explain why or why not.

No, the convolution operation shrinks the matrix of pixels(input image) only if the size of the filter is greater than 1 i.e, f > 1.

When we apply a filter of 1×1, so in that location is no reduction in the size of the image and hence there is no loss of information.

xi. What is Footstep? What is the effect of high Stride on the feature map?

Step refers to the number of pixels past which nosotros slide over the filter matrix over the input matrix. For example –

- If Pace =1, and then move the filter ane pixel at a time.

- If Pace=2, then motility the filter two-pixel at a fourth dimension.

Moreover, larger Strides will produce a smaller feature map.

12. Explicate the role of the flattening layer in CNN.

Later a series of convolution and pooling operations on the feature representation of the image, we and then flatten the output of the terminal pooling layers into a single long continuous linear assortment or a vector.

The procedure of converting all the resultant 2-d arrays into a vector is called Flattening.

Flatten output is fed equally input to the fully continued neural network having varying numbers of hidden layers to learn the not-linear complexities present with the feature representation.

thirteen. List down the hyperparameters of a Pooling Layer.

The hyperparameters for a pooling layer are:

- Filter size

- Stride

- Max or boilerplate pooling

If the input of the pooling layer is nh 10 northwardw x nc, then the output will be –

Dimension = [ {(nh – f) / due south + 1}* {(due northwestward – f) / due south + ane}* northc']

xiv. What is the function of the Fully Connected (FC) Layer in CNN?

The aim of the Fully continued layer is to utilise the high-level feature of the input paradigm produced by convolutional and pooling layers for classifying the input image into various classes based on the training dataset.

Fully connected means that every neuron in the previous layer is connected to each and every neuron in the next layer. The Sum of output probabilities from the Fully connected layer is 1, fully connected using a softmax activation role in the output layer.

The softmax function takes a vector of arbitrary existent-valued scores and transforms it into a vector of values between 0 and 1 that sums to one.

Working

It works like an ANN, assigning random weights to each synapse, the input layer is weight-adjusted and put into an activation function. The output of this is then compared to the truthful values and the error generated is dorsum-propagated, i.e. the weights are re-calculated and repeat all the processes. This is washed until the fault or cost role is minimized.

15. Briefly explain the two major steps of CNN i.e, Characteristic Learning and Classification.

Feature Learning deals with the algorithm by learning nigh the dataset. Components like Convolution, ReLU, and Pooling piece of work for that, with numerous iterations between them. Once the features are known, then nomenclature happens using the Flattening and Full Connection components.

Image Source: Google Images

xvi. What are the issues associated with the Convolution functioning and how can 1 resolve them?

As we know, convolving an input of dimensions 6 X 6 with a filter of dimension three X three results in the output of four X 4 dimension. Let's generalize the thought:

We can generalize it and say that if the input is n X due north and the Filter Size is f Ten f, and then the output size volition exist (north-f+1) X (n-f+1):

- Input: northward X n

- Filter size: f X f

- Output: (n-f+i) X (north-f+1)

There are primarily two disadvantages hither:

- When nosotros utilise a convolutional functioning, the size of the paradigm shrinks every time.

- Pixels present in the corner of the image i.east, in the edges, are used only a few times during convolution equally compared to the central pixels. Hence, we do not focus also much on the corners and so information technology tin atomic number 82 to information loss.

To overcome these problems, we tin use the padding to the images with an boosted border, i.due east., nosotros add one pixel all effectually the edges. This ways that the input will exist of the dimension viii X 8 instead of a half-dozen 10 6 matrix. Applying convolution on the input of filter size iii X 3 on it volition result in a 6 X 6 matrix which is the aforementioned as the original shape of the image. This is where Padding comes into the picture:

Padding: In convolution, the functioning reduces the size of the image i.e, spatial dimension decreases thereby leading to information loss. As we keep applying convolutional layers, the size of the volume or feature map will subtract faster.

Zip Paddings allow u.s.a. to control the size of the feature map.

Padding is used to make the output size the same as the input size.

Padding amount = number of rows and columns that we volition insert in the tiptop, bottom, left, and right of the image. After applying padding,

- Input: n Ten north

- Padding: p

- Filter size: f X f

- Output: (n+2p-f+i) X (n+2p-f+i)

17. Let united states of america consider a Convolutional Neural Network having three unlike convolutional layers in its architecture as –

Layer-one: Filter Size – 3 X 3, Number of Filters – ten, Stride – 1, Padding – 0

Layer-2: Filter Size – 5 X v, Number of Filters – 20, Stride – 2, Padding – 0

Layer-iii: Filter Size – 5 X5 , Number of Filters – 40, Footstep – 2, Padding – 0

If nosotros requite the input a 3-D image to the network of dimension 39 X 39, so determine the dimension of the vector after passing through a fully connected layer in the architecture.

Here we take the input image of dimension 39 10 39 X 3 convolves with 10 filters of size 3 X 3 and takes the Stride as 1 with no padding. After these operations, we will get an output of 37 X 37 X 10.

We and then convolve this output further to the next convolution layer as an input and get an output of seven X seven X 40. Finally, by taking all these numbers (7 X vii X forty = 1960), and and so unroll them into a big vector, and laissez passer them to a classifier that volition make predictions.

18. Explicate the significance of "Parameter Sharing" and "Sparsity of connections" in CNN.

Parameter sharing: In convolutions, we share the parameters while convolving through the input. The intuition behind this is that a feature detector, which is useful in one role of the image may also exist useful in some other function of the image. So, by using a unmarried filter we convolved all the unabridged input and hence the parameters are shared.

Let's sympathize this with an case,

If we would have used just the fully connected layer, the number of parameters would exist = 32*32*3*28*28*half-dozen, which is nearly equal to 14 million which makes no sense.

Only in the case of a convolutional layer, the number of parameters volition be = (v*5 + i) * 6 (if there are 6 filters), which is equal to 156. Convolutional layers, therefore, reduce the number of parameters and speed up the training of the model significantly.

The sparsity of Connections: This implies that for each layer, each output value depends on a minor number of inputs, instead of taking into account all the inputs.

19. Explain the role of the Convolution Layer in CNN.

Convolution is a linear operation of a smaller filter to a larger input that results in an output feature map.

Convolution layer: This layer performs an performance chosen a convolution, hence the network is chosen a convolutional neural network. It extracts features from the input images. Convolution is a linear operation that involves the multiplication of a fix of weights with the input.

This technique was designed for 2d-input(array of data). The multiplication is performed betwixt an array of input information and a 2d array of weights called a filter or kernel.

This is the component that detects features in images preserving the human relationship between pixels by learning image features using pocket-size squares of input data i.e, respecting their spatial boundaries.

xx. Tin can we utilize CNN to perform Dimensionality Reduction? If Yes then which layer is responsible for dimensionality reduction particularly in CNN?

Aye, CNN does perform dimensionality reduction. A pooling layer is used for this.

The main objective of Pooling is to reduce the spatial dimensions of a CNN. To reduce the spatial dimensionality, it will perform the downward-sampling operations and creates a pooled feature map past sliding a filter matrix over the input matrix.

Give-and-take Problem

Let united states consider a Convolutional Neural Network having two unlike Convolutional Layers in the Architecture i.east,

Layer-1: Filter Size: five X five, Number of Filters: vi, Stride-i, Padding-0, Max-Pooling: (Filter Size: 2 X 2 with Stride-2)

Layer-2: Filter Size: 5 X 5, Number of Filters: xvi, Stride-i, Padding-0, Max-Pooling: (Filter Size: two X 2 with Step-ii)

If we requite a 3-D image as the input to the network of dimension 32 Ten 32, then the dimension of the vector after passing through a flattening layer in the architecture is _____?

End Notes

Thank you for reading!

I hope y'all enjoyed the questions and were able to test your knowledge about Convolutional Neural Networks.

If you liked this and desire to know more, go visit my other articles on Information Scientific discipline and Automobile Learning by clicking on the Link

Please feel complimentary to contact me on Linkedin, Email.

Something not mentioned or desire to share your thoughts? Feel free to annotate beneath And I'll get back to you.

Well-nigh the author

Chirag Goyal

Currently, I am pursuing my Available of Technology (B.Tech) in Reckoner Science and Engineering science from the Indian Institute of Applied science Jodhpur(IITJ). I am very enthusiastic about Auto learning, Deep Learning, and Bogus Intelligence.

The media shown in this article are not owned by Analytics Vidhya and is used at the Author'due south discretion.

Source: https://www.analyticsvidhya.com/blog/2021/05/20-questions-to-test-your-skills-on-cnn-convolutional-neural-networks/

Posted by: cameronandso1947.blogspot.com

0 Response to "How Data Dimension Change In Convolutional Network"

Post a Comment